Sailing Logbook

Totals:

- As skipper: 693 NM

- Total: 793 NM

Season 2023

- Distance: 215 NM

- Maximum speed: 5.9 kts

- Average speed: 3.9 kts

Season 2022

- Distance: 208 NM

- Maximum speed: 7.6 kts

- Average speed: 3.1 kts

Season 2021

- Distance: 183 NM

- Maximum speed: 9.9 kts

- Average speed: 4.3 kts

Season 2020

- Distance: 87 NM

- Maximum speed: 7.1 kts

- Average speed: 3.1 kts

Season 2019

- Distance: 100 NM (sailed in the Baltic Sea as training)
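The two totals follow from the season distances. The four Croatian seasons (2020 to 2023) add up to 693 NM as skipper, and the 100 NM of Baltic Sea training in 2019 brings the overall total to 793 NM. Here is a small shell check of that arithmetic. It assumes the 2019 training miles are the only ones not sailed as skipper.

```shell
#!/usr/bin/env bash
# Season distances in NM for 2023, 2022, 2021 and 2020 (sailed as skipper).
skipper_seasons=(215 208 183 87)
# 2019 Baltic Sea training; assumed not sailed as skipper, so it only counts in the total.
training_nm=100

skipper_nm=0
for nm in "${skipper_seasons[@]}"; do
  skipper_nm=$(( skipper_nm + nm ))
done
total_nm=$(( skipper_nm + training_nm ))

echo "As skipper: ${skipper_nm} NM"   # As skipper: 693 NM
echo "Total: ${total_nm} NM"          # Total: 793 NM
```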

2023

Marina Veruda 29.04.2023 - 06.05.2023

Trip summary:

- Distance: 215 NM

- Maximum speed: 6.08 kts

- Average speed: 3.88 kts

- Boat: Elan 40.1 Impression | Estela

- Year: 2020

- Draft: 1.80 m

- Length: 11.83 m

- Beam: 3.91 m

- Engine: 40 hp (29.8 kW)

- Fuel tank: 146 l

- Water tank: 400 l

- Classic mainsail

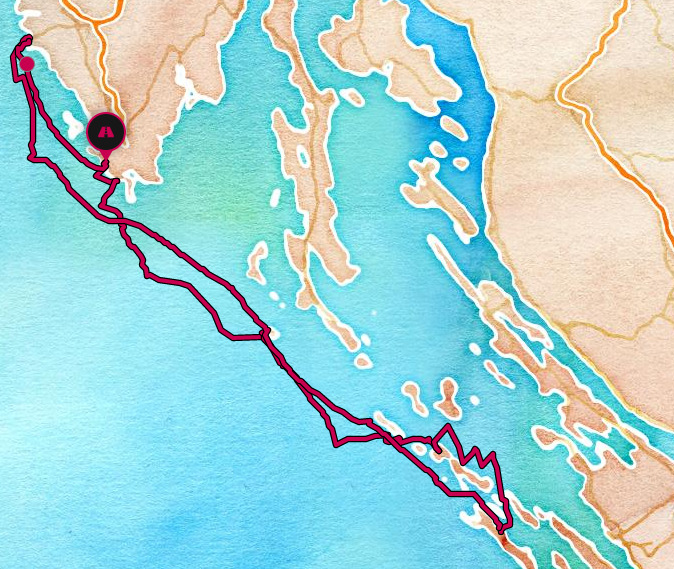

Route stops and details:

Trip Gallery

2022

Marina Pomer 07.05.2022 - 14.05.2022

Trip summary:

- Distance: 208 NM

- Maximum speed: 7.5 kts

- Average speed: 3.3 kts

- Boat: Beneteau Oceanis 35 | DalMar

- Year: 2016

- Draft: 1.85 m

- Length: 9.99 m

- Beam: 3.7 m

- Engine: 29 hp (21.6 kW)

- Fuel tank: 130 l

- Water tank: 330 l

- Classic mainsail

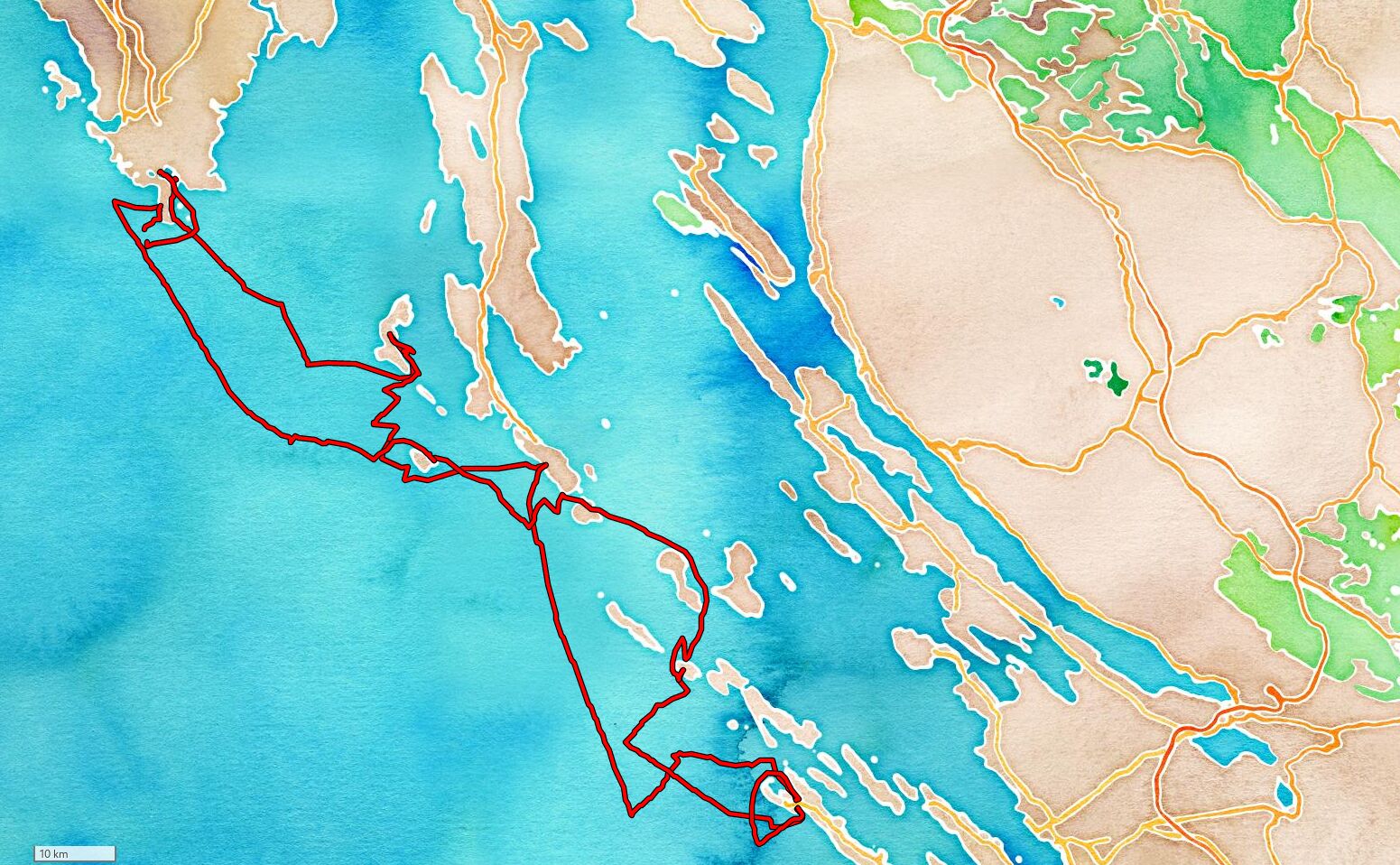

Route stops and details:

- Start: Marina Pomer

- End: Marina Pomer

- GPX

Trip Gallery

2021

Marina Frapa Rogoznica 29.05.2021 - 05.06.2021

Trip summary:

- Distance: 183 NM

- Maximum speed: 9.9 kts

- Average speed: 4.3 kts

- Boat: Dufour 430 | Calando

- Year: 2020

- Draft: 2.10 m

- Length: 13.24 m

- Beam: 4.3 m

- Engine: 60 hp (44.7 kW)

- Fuel tank: 200 l

- Water tank: 380 l

- Rolling mainsail

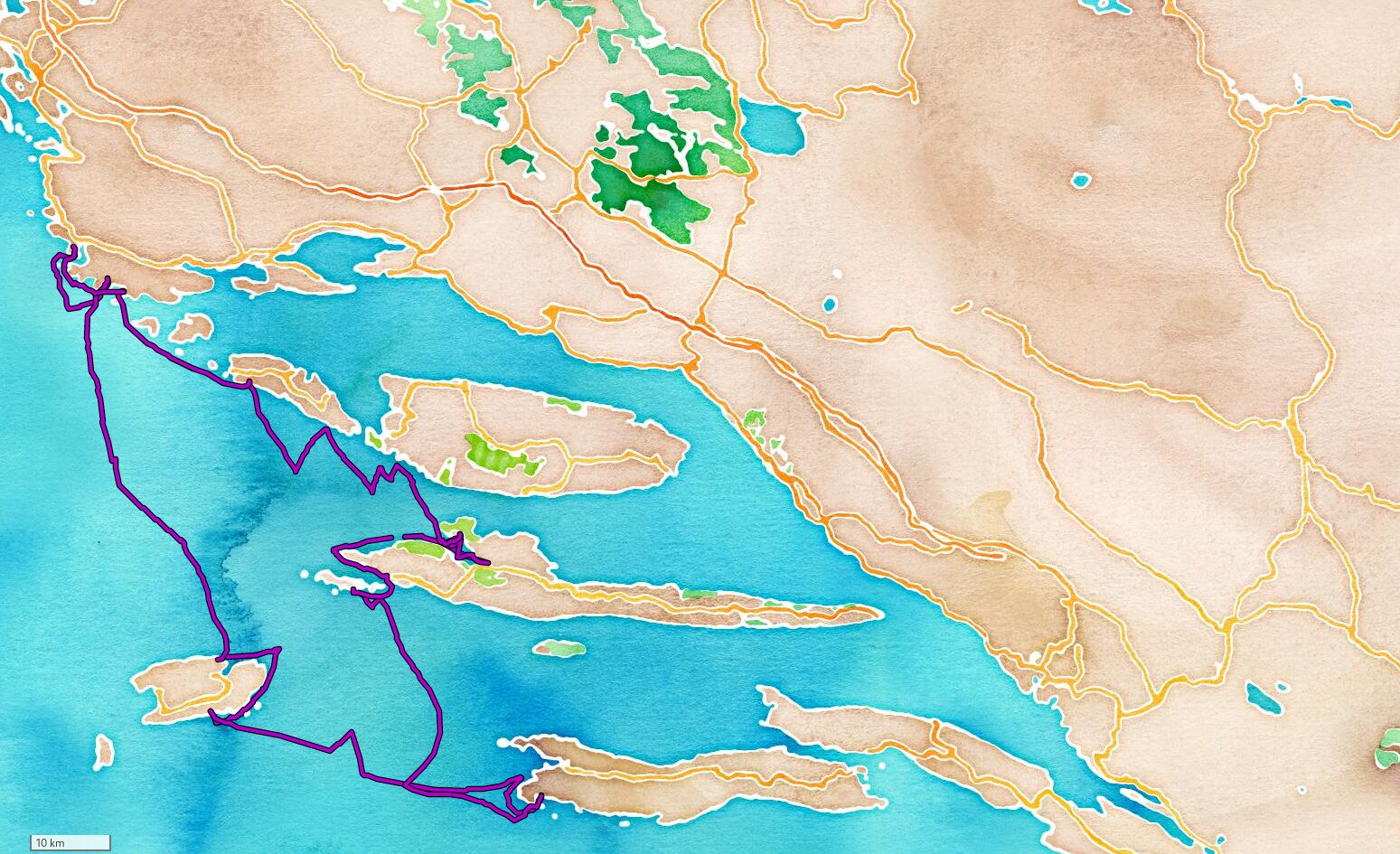

Route stops and details:

- Start: Marina Frapa

- End: Marina Frapa

- GPX

Trip Gallery

2020

Vodice 05.09.2020 - 12.09.2020

Trip summary:

- Distance: 87 NM

- Maximum speed: 7.1 kts

- Average speed: 3.1 kts

- Boat: Jeanneau Sun Odyssey 30 | Espresso 1

- Year: 2009

- Draft: 1.95 m

- Length: 8.99 m

- Beam: 3.18 m

- Engine: 21 hp (15.7 kW)

- Fuel tank: 50 l

- Water tank: 160 l

- Rolling mainsail

Route details

Trip Gallery

Sailing Galleries

Veruda - Dragove - Rovinj - Veruda 2023

Pomer - Veli Rat - Pomer 2022

Frapa - Vis - Frapa 2021

Vodice - Zut - Vodice 2020

Filesystem

NFS with KRB5

Set up the KDC:

- Install the required packages for the KDC.

[root@kdc-server ~]# dnf install krb5-libs krb5-server krb5-workstation

- Edit /etc/krb5.conf:

# To opt out of the system crypto-policies configuration of krb5, remove the

# symlink at /etc/krb5.conf.d/crypto-policies which will not be recreated.

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

dns_lookup_realm = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

rdns = false

pkinit_anchors = FILE:/etc/pki/tls/certs/ca-bundle.crt

spake_preauth_groups = edwards25519

dns_canonicalize_hostname = fallback

qualify_shortname = ""

default_realm = EXAMPLE.COM

default_ccache_name = KEYRING:persistent:%{uid}

[realms]

EXAMPLE.COM = {

kdc = kdc.example.com

admin_server = kdc.example.com

}

[domain_realm]

.example.com = EXAMPLE.COM

example.com = EXAMPLE.COM

- Create the database using kdb5_util (-s stores the master key in a stash file, so the KDC can start without prompting for it):

[root@kdc-server ~]# kdb5_util create -s

- Set the ACL in /var/kerberos/krb5kdc/kadm5.acl. The setting below gives full administrative access to any principal with the admin instance, for example user/admin@EXAMPLE.COM:

*/admin@EXAMPLE.COM *

- Create the first principal using kadmin.local at the KDC terminal:

[root@kdc-server ~]# kadmin.local -q "addprinc user/admin"

- Start krb5kdc and kadmin:

[root@kdc-server ~]# systemctl enable --now kadmin.service krb5kdc

NFS server configuration.

- Install the packages for the Kerberos client and the NFS server:

[root@nfs-server ~]# dnf install krb5-workstation nfs-utils

- NFSv4 ID mapping becomes much more important with Kerberos. The server and the clients should have the same ID mapping domain configured. In /etc/idmapd.conf, set Domain to the DNS domain that corresponds to your Kerberos realm (example.com for EXAMPLE.COM):

[General]

Domain = example.com

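- Each NFS server needs a Kerberos principal nfs/server.fqdn created on the KDC, with its keys added to the server's /etc/krb5.keytab. Run kadmin on the NFS server itself so that ktadd writes the keys to its local keytab. A host/ principal is added as well: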

[root@nfs-server ~]# kadmin -p username/admin

Password for username/admin@EXAMPLE.COM: ***********

kadmin: addprinc -nokey nfs/nfs-server.example.com

kadmin: addprinc -nokey host/nfs-server.example.com

kadmin: ktadd nfs/nfs-server.example.com

kadmin: ktadd host/nfs-server.example.com

[root@nfs-server ~]# klist -ke

Keytab name: FILE:/etc/krb5.keytab

KVNO Principal

---- -------------------------------------------------------------------

1 host/nfs-server.example.com@EXAMPLE.COM (aes256-cts-hmac-sha384-192)

1 host/nfs-server.example.com@EXAMPLE.COM (aes128-cts-hmac-sha256-128)

1 host/nfs-server.example.com@EXAMPLE.COM (aes256-cts-hmac-sha1-96)

1 host/nfs-server.example.com@EXAMPLE.COM (aes128-cts-hmac-sha1-96)

1 host/nfs-server.example.com@EXAMPLE.COM (camellia256-cts-cmac)

1 host/nfs-server.example.com@EXAMPLE.COM (camellia128-cts-cmac)

1 host/nfs-server.example.com@EXAMPLE.COM (DEPRECATED:arcfour-hmac)

1 nfs/nfs-server.example.com@EXAMPLE.COM (aes256-cts-hmac-sha384-192)

1 nfs/nfs-server.example.com@EXAMPLE.COM (aes128-cts-hmac-sha256-128)

1 nfs/nfs-server.example.com@EXAMPLE.COM (aes256-cts-hmac-sha1-96)

1 nfs/nfs-server.example.com@EXAMPLE.COM (aes128-cts-hmac-sha1-96)

1 nfs/nfs-server.example.com@EXAMPLE.COM (camellia256-cts-cmac)

1 nfs/nfs-server.example.com@EXAMPLE.COM (camellia128-cts-cmac)

1 nfs/nfs-server.example.com@EXAMPLE.COM (DEPRECATED:arcfour-hmac)

- Enable and start the gssproxy.service

[root@nfs-server ~]# systemctl enable --now gssproxy.service

NFS client configuration

- Install nfs-utils and krb5-workstation as on the NFS server. Use the same /etc/krb5.conf and the same Domain in /etc/idmapd.conf.

- Add the NFS client to Kerberos. Run kadmin on the client, because ktadd writes the keys to the keytab of the machine where kadmin runs (/etc/krb5.keytab by default):

[root@nfs-client ~]# kadmin -p username/admin

Password for username/admin@EXAMPLE.COM: ***********

kadmin: addprinc -nokey host/nfs-client.example.com

kadmin: ktadd host/nfs-client.example.com

[root@nfs-client ~]# klist -ke

Benchmarks

Benchmark of NFSv4.2 with different security flavors (sec=sys, krb5, krb5i and krb5p).

Environment

NFS Server and KDC:

- OS: Fedora 40 Qemu KVM virtual machine.

- 20 CPUs Intel® Xeon® Gold 5215 CPU @ 2.50GHz.

- 250GB of memory, of which 100GB is used for /dev/pmem0 emulation.

- CPUs pinned to NUMA node 0 (the NFS clients are pinned to NUMA node 1).

- All configuration is left at default values, including nfs.conf.

- The NFS export is on XFS on top of /dev/pmem0, without the DAX option.

- Virtio isolated network.

NFS clients

- OS: RHEL 7, RHEL 8 and RHEL 9 (QEMU/KVM virtual machines).

- 8 CPUs Intel® Xeon® Gold 5215 CPU @ 2.50GHz with 8GB of RAM.

- Virtio isolated network.

- Default NFS mount options (the four mounts differ only in sec=):

fedora-kdc.example.com:/mnt/nfs /mnt/sys nfs4 rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=192.168.0.2,local_lock=none,addr=192.168.0.1 0 0

fedora-kdc.example.com:/mnt/nfs /mnt/krb5 nfs4 rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=krb5,clientaddr=192.168.0.2,local_lock=none,addr=192.168.0.1 0 0

fedora-kdc.example.com:/mnt/nfs /mnt/krb5i nfs4 rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=krb5i,clientaddr=192.168.0.2,local_lock=none,addr=192.168.0.1 0 0

fedora-kdc.example.com:/mnt/nfs /mnt/krb5p nfs4 rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=krb5p,clientaddr=192.168.0.2,local_lock=none,addr=192.168.0.1 0 0
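The four mounts share one export and differ only in sec=. Each mount point is named after its flavor, which matches /mnt/$jobname in the fio job file. Below is a dry-run sketch that prints the corresponding mount commands. The function name print_mounts is hypothetical. The krb5* mounts also need a working Kerberos setup on the client: its host/ key in /etc/krb5.keytab and rpc.gssd running.

```shell
#!/usr/bin/env bash
# Dry run: print the mount commands for the four benchmark mounts.
# Drop "echo" and run as root on the client to actually mount.
server=fedora-kdc.example.com
export_path=/mnt/nfs

print_mounts() {
  local sec
  for sec in sys krb5 krb5i krb5p; do
    # Mount point /mnt/<flavor> matches filename_format=/mnt/$jobname/... in fio.
    echo mount -t nfs4 -o "vers=4.2,sec=${sec}" "${server}:${export_path}" "/mnt/${sec}"
  done
}

print_mounts
```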

Baseline testing with the following fio configuration:

# cat nfs_08k_rand_write.ini

[global]

direct=1

ioengine=libaio

bs=8k

gtod_reduce=1

size=2G

iodepth=128

rw=randwrite

group_reporting=1

numjobs=4

filename_format=/mnt/$jobname/nfs.$jobnum

[sys]

stonewall=1

[krb5]

stonewall=1

[krb5i]

stonewall=1

[krb5p]

stonewall=1
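Each of the four sections inherits [global]. With stonewall=1, a section waits for the previous one to finish and starts a new reporting group, which is why the output shows groups g=0 to g=3. With numjobs=4 and size=2G, every group writes 8 GiB in 8 KiB requests, which matches io=8192MiB in the NFS results. The sketch below shows the arithmetic, plus the bandwidth for one reported run (RHEL 7, sec=sys, run=45878msec).

```shell
#!/usr/bin/env bash
# Values from the [global] section of nfs_08k_rand_write.ini.
numjobs=4
size_bytes=$(( 2 * 1024 ** 3 ))   # size=2G per job (fio uses base 1024 by default)
bs_bytes=$(( 8 * 1024 ))          # bs=8k

# Every stonewalled section (sys, krb5, krb5i, krb5p) is its own reporting group.
group_bytes=$(( numjobs * size_bytes ))
writes_per_group=$(( group_bytes / bs_bytes ))
echo "Data per group: $(( group_bytes / 1024 ** 2 )) MiB"   # 8192 MiB
echo "8k writes per group: ${writes_per_group}"             # 1048576

# Bandwidth is data divided by runtime: RHEL 7 sec=sys wrote 8192 MiB in 45878 ms.
bw=$(awk -v mib=8192 -v ms=45878 'BEGIN { printf "%.0f", mib / (ms / 1000) }')
echo "RHEL 7 sec=sys: ${bw} MiB/s"                           # 179 MiB/s
```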

Results on the NFS server, writing directly to the local filesystem. The disk stats are zero because /dev/pmem0 does not report anything to /proc/diskstats.

[root@fedora-kdc ~]# fio xfs_08k_rand_write.ini

nfs: (g=0): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

fio-3.36

Starting 4 processes

Jobs: 4 (f=4): [w(4)][94.1%][w=7443MiB/s][w=953k IOPS][eta 00m:02s]

nfs: (groupid=0, jobs=4): err= 0: pid=8415: Thu Aug 15 11:22:14 2024

write: IOPS=331k, BW=2586MiB/s (2712MB/s)(80.0GiB/31677msec); 0 zone resets

bw ( MiB/s): min= 179, max= 7466, per=99.74%, avg=2579.39, stdev=829.78, samples=252

iops : min=22962, max=955700, avg=330161.65, stdev=106212.09, samples=252

cpu : usr=6.09%, sys=48.51%, ctx=695334, majf=0, minf=31

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%

issued rwts: total=0,10485760,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=128

Run status group 0 (all jobs):

WRITE: bw=2586MiB/s (2712MB/s), 2586MiB/s-2586MiB/s (2712MB/s-2712MB/s), io=80.0GiB (85.9GB), run=31677-31677msec

Disk stats (read/write):

pmem0: ios=0/0, sectors=0/0, merge=0/0, ticks=0/0, in_queue=0, util=0.00%

RHEL 7:

- Encryption type: aes256-cts-hmac-sha1-96

sys: (g=0): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5: (g=1): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5i: (g=2): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5p: (g=3): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

Run status group 0 (all jobs):

WRITE: bw=179MiB/s (187MB/s), 179MiB/s-179MiB/s (187MB/s-187MB/s), io=8192MiB (8590MB), run=45878-45878msec

Run status group 1 (all jobs):

WRITE: bw=173MiB/s (182MB/s), 173MiB/s-173MiB/s (182MB/s-182MB/s), io=8192MiB (8590MB), run=47316-47316msec

Run status group 2 (all jobs):

WRITE: bw=130MiB/s (137MB/s), 130MiB/s-130MiB/s (137MB/s-137MB/s), io=8192MiB (8590MB), run=62825-62825msec

Run status group 3 (all jobs):

WRITE: bw=81.9MiB/s (85.8MB/s), 81.9MiB/s-81.9MiB/s (85.8MB/s-85.8MB/s), io=8192MiB (8590MB), run=100064-100064msec

RHEL 8:

- Encryption type: aes256-cts-hmac-sha384-192

sys: (g=0): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5: (g=1): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5i: (g=2): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5p: (g=3): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

Run status group 1 (all jobs):

WRITE: bw=176MiB/s (185MB/s), 176MiB/s-176MiB/s (185MB/s-185MB/s), io=8192MiB (8590MB), run=46488-46488msec

Run status group 2 (all jobs):

WRITE: bw=154MiB/s (161MB/s), 154MiB/s-154MiB/s (161MB/s-161MB/s), io=8192MiB (8590MB), run=53239-53239msec

Run status group 3 (all jobs):

WRITE: bw=152MiB/s (159MB/s), 152MiB/s-152MiB/s (159MB/s-159MB/s), io=8192MiB (8590MB), run=53974-53974msec

RHEL 9:

- Encryption type: aes256-cts-hmac-sha384-192

sys: (g=0): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5: (g=1): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5i: (g=2): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

krb5p: (g=3): rw=randwrite, bs=(R) 8192B-8192B, (W) 8192B-8192B, (T) 8192B-8192B, ioengine=libaio, iodepth=128

...

Run status group 0 (all jobs):

WRITE: bw=198MiB/s (208MB/s), 198MiB/s-198MiB/s (208MB/s-208MB/s), io=8192MiB (8590MB), run=41394-41394msec

Run status group 1 (all jobs):

WRITE: bw=204MiB/s (214MB/s), 204MiB/s-204MiB/s (214MB/s-214MB/s), io=8192MiB (8590MB), run=40156-40156msec

Run status group 2 (all jobs):

WRITE: bw=179MiB/s (187MB/s), 179MiB/s-179MiB/s (187MB/s-187MB/s), io=8192MiB (8590MB), run=45868-45868msec

Run status group 3 (all jobs):

WRITE: bw=151MiB/s (158MB/s), 151MiB/s-151MiB/s (158MB/s-158MB/s), io=8192MiB (8590MB), run=54363-54363msec

Overview

| OS | Filesystem | SEC | RANDOM WRITE 8k |
|---|---|---|---|
| Fedora 40 | XFS | – | 2586MiB/s |
| RHEL 7 | NFS | sys | 179MiB/s |
| RHEL 7 | NFS | krb5 | 173MiB/s |
| RHEL 7 | NFS | krb5i | 130MiB/s |
| RHEL 7 | NFS | krb5p | 81.9MiB/s |
| RHEL 8 | NFS | sys | 205MiB/s |
| RHEL 8 | NFS | krb5 | 176MiB/s |
| RHEL 8 | NFS | krb5i | 154MiB/s |
| RHEL 8 | NFS | krb5p | 152MiB/s |
| RHEL 9 | NFS | sys | 189MiB/s |
| RHEL 9 | NFS | krb5 | 182MiB/s |
| RHEL 9 | NFS | krb5i | 180MiB/s |
| RHEL 9 | NFS | krb5p | 162MiB/s |
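Expressing each flavor as a ratio of the sec=sys result for the same OS makes the cost of integrity and privacy easier to compare. Using the Overview table values, krb5p reaches about 46% of the sys throughput on RHEL 7, about 74% on RHEL 8 and about 86% on RHEL 9. Below is an awk sketch of that calculation.

```shell
#!/usr/bin/env bash
# 8k random-write throughput (MiB/s) as listed in the Overview table.
results='RHEL7 sys 179
RHEL7 krb5 173
RHEL7 krb5i 130
RHEL7 krb5p 81.9
RHEL8 sys 205
RHEL8 krb5 176
RHEL8 krb5i 154
RHEL8 krb5p 152
RHEL9 sys 189
RHEL9 krb5 182
RHEL9 krb5i 180
RHEL9 krb5p 162'

# Express every flavor as a percentage of the sec=sys result of the same OS.
# Assumes each OS lists its sys row before its krb5* rows, as in the table.
relative=$(awk '{
    if ($2 == "sys") base[$1] = $3
    printf "%s %s %.1f%%\n", $1, $2, 100 * $3 / base[$1]
}' <<< "$results")

echo "$relative"
```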

Storage

Targetcli

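Script for creating loopback disks: 17 logical volumes (disk00 to disk16, 100G each) in the volume group export, each exported as a LUN of a single loopback target:

# Create the loopback target once, before the loop.

targetcli /loopback create wwn=naa.5000000000000000

for i in {00..16}; do

    # Logical volume backing the LUN.

    lvcreate -y -n disk$i -L100G export

    # Block backstore on top of the logical volume.

    targetcli /backstores/block create dev=/dev/export/disk$i name=disk$i

    targetcli /backstores/block/disk$i set attribute optimal_sectors=4096

    # Map the backstore as a LUN of the loopback target.

    targetcli /loopback/naa.5000000000000000/luns create storage_object=/backstores/block/disk$i

done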

Input/Output (I/O)

A brief overview of the I/O mechanisms supported by the Linux kernel (as of 2023).

Synchronous

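Represented by the system calls read()/write(), pread()/pwrite() and their vectored versions readv()/writev() and preadv()/pwritev(). The call returns only once the transfer has completed (for a buffered write, once the data has been copied into the page cache).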

Asynchronous

Represented by POSIX AIO (man 7 aio), libaio (the Linux-native io_submit() interface) and, most recently, io_uring.

Buffered

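I/O that goes through the page cache. A buffered write only copies the data into the page cache and marks the pages dirty. The kernel's background writeback (flusher threads) writes them to the device later, unless the application forces it earlier with fsync()/fdatasync(). Buffered reads are served from the page cache when the data is already cached.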

Direct I/O:

With direct I/O, data is read from or written to the storage device (e.g. HDD, SSD, NVMe) without being cached in the operating system's page cache. The file is opened with the O_DIRECT flag, and data is transferred directly between the application's buffer and the storage device, bypassing the kernel's caching layer. Buffer addresses, file offsets and transfer sizes usually have to be aligned to the device's logical block size.

Benefits of Direct I/O:

- Direct I/O is often used where the application needs control over its I/O. Examples are databases that maintain their own buffer cache (avoiding double caching in the page cache) and some scientific computing applications.

- Bypassing the page cache removes interference from kernel caching and writeback. This can reduce the variability of I/O response times compared with buffered I/O. O_DIRECT alone does not guarantee durability, though. Data may still sit in the device's volatile write cache until fsync()/fdatasync() is called or the file is opened with O_DSYNC.
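GNU dd can show the difference between buffered and direct writes. By default, dd writes through the page cache. conv=fsync adds an fsync() before dd exits, and oflag=direct opens the output file with O_DIRECT. The sketch below assumes GNU coreutils. TESTDIR is a hypothetical variable for pointing the test at a disk-backed directory, because tmpfs (often /tmp) may reject O_DIRECT.

```shell
#!/usr/bin/env bash
# Compare buffered, fsync'ed and direct writes of 4 MiB with GNU dd.
set -u
# TESTDIR (hypothetical): directory on a disk-backed filesystem; defaults to a temp dir.
testdir=${TESTDIR:-$(mktemp -d)}

# Buffered: write(2) copies the data into the page cache and returns;
# the kernel writes the dirty pages to the device later.
dd if=/dev/zero of="$testdir/buffered" bs=4k count=1024 status=none

# Buffered, then fsync(2) before dd exits: waits until the data reaches the device.
dd if=/dev/zero of="$testdir/fsynced" bs=4k count=1024 conv=fsync status=none

# Direct: the output file is opened with O_DIRECT, bypassing the page cache.
# The 4k block size keeps buffers and offsets aligned for most devices.
dd if=/dev/zero of="$testdir/direct" bs=4k count=1024 oflag=direct status=none \
  || echo "O_DIRECT is not supported on $testdir" >&2
```

Prefixing each dd with time typically shows the buffered write returning much sooner than the other two, which have to wait for the device.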

x86_64 REGISTERS

General-Purpose Registers

The 64-bit versions of the ‘original’ x86 registers are named:

- rax - register a extended

- rbx - register b extended

- rcx - register c extended

- rdx - register d extended

- rbp - register base pointer (base of the current stack frame)

- rsp - register stack pointer (current location in stack, growing downwards)

- rsi - register source index (source for data copies)

- rdi - register destination index (destination for data copies)

The registers added for 64-bit mode are named:

- r8 - register 8

- r9 - register 9

- r10 - register 10

- r11 - register 11

- r12 - register 12

- r13 - register 13

- r14 - register 14

- r15 - register 15

These may be accessed as:

- 64-bit registers using the r prefix: rax, r15.

- 32-bit registers using the e prefix or d suffix: eax, r15d.

- 16-bit registers using no prefix or a w suffix: ax, r15w.

- 8-bit registers using the h ("high byte" of 16 bits) suffix: ah, bh (only ah, bh, ch and dh exist).

- 8-bit registers using the l ("low byte" of 16 bits) suffix or the b suffix: al, r15b.

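Usage during function calls and syscalls:

Function calls (System V AMD64 ABI) pass the first six integer or pointer arguments in rdi, rsi, rdx, rcx, r8 and r9; remaining arguments are on the stack. Syscalls take at most six arguments, in rdi, rsi, rdx, r10, r8 and r9. r10 replaces rcx because the syscall instruction overwrites rcx (and r11).

For syscalls, the syscall number is in rax. When calling a variadic function such as printf, al must hold an upper bound on the number of vector registers used for arguments, which is why rax is often set to 0 before such calls. The called routine is expected to preserve rsp, rbp, rbx, r12, r13, r14 and r15 but may trample any other registers. The return value is in rax.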

GIT

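GitHub notes.

Submitting changes to an existing pull request:

- rebase

- force push

More verbose:

- make the changes,

- commit,

- git rebase -i HEAD~2 and mark the new commit with f (fixup), which squashes its code into the previous commit and keeps the previous commit message,

- git push --force to update the pull request branch.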
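The workflow above can be reproduced in a throwaway repository. git rebase -i normally opens an editor, so this sketch sets GIT_SEQUENCE_EDITOR to a sed command (GNU sed assumed) that changes the second pick to fixup. That is the same as typing f in the todo list. The remote name origin and <branch> are placeholders.

```shell
#!/usr/bin/env bash
set -euo pipefail

repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "Example User"
git config user.email "user@example.com"

echo "base" > README
git add README
git commit -q -m "Initial commit"

echo "feature v1" > feature.txt
git add feature.txt
git commit -q -m "Add feature"

# Review feedback: change the code and commit it on top.
echo "feature v2" > feature.txt
git commit -q -am "Address review comments"

# Same as `git rebase -i HEAD~2` with the second "pick" changed to "f":
# the fix is folded into "Add feature" and only that commit message is kept.
GIT_SEQUENCE_EDITOR="sed -i '2s/^pick/fixup/'" git rebase -q -i HEAD~2

git log --format=%s    # Add feature, Initial commit
# Then update the pull request, e.g.:
# git push --force-with-lease origin <branch>
```

--force-with-lease is a safer variant of --force: it refuses to overwrite the remote branch if someone else has pushed to it since the last fetch.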

Docs

Nextcloud internals

Clearing the brute-force protection entries for an IP address:

use nextcloud;

show tables;

select * from oc_bruteforce_attempts;

delete from oc_bruteforce_attempts where ip='xxx.xxx.xxx.xxx';